Today is New Year’s Day, a day typically devoted to hangovers and making resolutions.

I recently saw a presentation about automotive computer forensics that made me think about New Year’s resolutions. Despite my background in computer forensics, I had never considered that automotive computers were advanced enough to be the subject of forensic investigations. I enjoyed the presentation, and I seriously considered taking the class, even though it would not advance my career in Texas.

But then the instructor ruined the class for me by doing two things.

The first was when the presenter—an instructor for a world-famous IT school—talked about driving his yellow muscle car at 65 MPH in a 15-MPH school zone and getting a ticket.

Does he use the ticket as an agent of change? Take his punishment? Learn to drive his car on a racetrack?

No, he was standing up there bragging about his yellow car and about getting away with speeding through a school zone. He is just like the rich and powerful gropers who have been in the news lately. They do it because they can and because they get away with it (or at least used to). I do not admire them, and I do not admire him.

I appreciate that traffic tickets are expensive (particularly tickets in a school zone), that such a ticket would send the recipient’s insurance rates through the roof, that such a driver might be required to attend traffic school, and that there might be other consequences. I understand the desire to avoid those consequences. I understand that he has a legal right to hire an attorney who would reschedule the court hearing until the police officer could not attend.

Since the police officer could not attend, the ticket was dismissed.

When he was talking about this experience, I was nodding right along with everyone else, but on the way home I started thinking about what he had said and who he is. This presenter holds several certifications (such as the CISSP), many of which require holders to agree to abide by strict ethical standards. In fact, (ISC)², the certifying body for the CISSP, publishes just such a code of ethics. The relevant portion is listed below:

Code of Ethics Preamble:

- The safety and welfare of society and the common good, duty to our principals, and to each other, requires that we adhere, and be seen to adhere, to the highest ethical standards of behavior.

- Therefore, strict adherence to this Code is a condition of certification.

Code of Ethics Canons:

- Protect society, the common good, necessary public trust and confidence, and the infrastructure.

- Act honorably, honestly, justly, responsibly, and legally.

- Provide diligent and competent service to principals.

- Advance and protect the profession.

Driving 50 MPH over the speed limit obviously violates several of those canons (even though this situation was probably not what (ISC)² had in mind). Another problem I have with his crazy driving is that this man appeared to be in his late sixties. Does he have the reflexes necessary to drive like this?

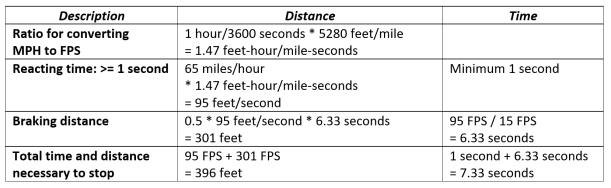

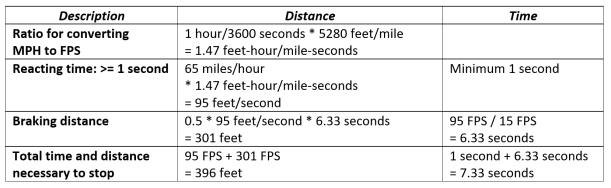

How far does a car going 65 MPH travel before coming to a complete stop? According to Government-Fleet.com, “it takes the average driver from one-half to three-quarters of a second to perceive a need to hit the brakes, and another three-quarters of a second to move your (sic) foot from the gas to the brake pedal.” Nacto.org states that “… if a street surface is dry, the average driver can safely decelerate an automobile or light truck with reasonably good tires at the rate of about 15 feet per second (FPS).” Because this describes a deceleration, it should be read as 15 feet per second every second (15 ft/s²): the car sheds about 15 ft/s of speed each second it brakes. Let’s examine how that plays out.

To put this in perspective, a football field is 300 feet long from goal line to goal line. A vehicle traveling 65 MPH (given average conditions) will take 396 feet to stop, more than the length of a football field!

The laws of physics apply to everyone. It does not matter how well you drive. If a six-year-old child steps in front of a vehicle traveling 65 MPH, he or she is dead. If the vehicle is traveling 15 MPH, the kid at least has a chance to learn a lesson.
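The point about the laws of physics can be made concrete. The following minimal Python sketch estimates how fast a car is still moving when it reaches a child who steps out a given distance ahead. It assumes constant speed during a 1.25-second perception-and-reaction time, followed by a constant deceleration of 15 ft/s². The function name `impact_speed_mph` and the 50- and 150-foot distances are hypothetical, illustrative choices, not figures from the cited sources.

```python
import math

FPS_PER_MPH = 5280 / 3600  # 1 mph is about 1.467 ft/s


def impact_speed_mph(speed_mph, distance_ft, reaction_s=1.25, decel_fps2=15.0):
    """Speed (mph) at which a car reaches an obstacle distance_ft ahead.

    Assumes the car covers reaction_s seconds at constant speed before
    braking, then decelerates at a constant decel_fps2 (ft/s^2).
    Returns 0.0 if the car stops short of the obstacle.
    """
    v0 = speed_mph * FPS_PER_MPH
    reaction_ft = v0 * reaction_s
    if distance_ft <= reaction_ft:
        return float(speed_mph)  # the brakes have not even engaged yet
    braking_ft = distance_ft - reaction_ft
    # Constant deceleration: v^2 = v0^2 - 2 * a * s
    v_squared = v0 * v0 - 2 * decel_fps2 * braking_ft
    if v_squared <= 0:
        return 0.0  # the car stopped before reaching the obstacle
    return math.sqrt(v_squared) / FPS_PER_MPH


for mph in (15, 65):
    for dist in (50, 150):
        speed = impact_speed_mph(mph, dist)
        print(f"{mph} mph, child {dist} ft ahead: impact at {speed:.0f} mph")
```

Under these assumptions, a driver doing 15 MPH stops short of a child 50 feet ahead, while a driver doing 65 MPH has not even reached the brake pedal at that point and hits the child at full speed. Even with 150 feet of warning, the faster car is still doing about 62 MPH at impact.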

The second thing he said that I had an issue with came when he was talking about how vehicle forensics is now appearing in court cases. As an example, he described a case in Texas where a minister regularly connected his phone to his car’s infotainment system over Bluetooth, which meant that data stored on the phone, such as contacts and photos, was copied to the car’s computer. He claimed that even if a picture is deleted from the phone, it stays on the vehicle’s computer. When the preacher took his car to the dealership for service, some of the dealership’s service people stole nude pictures of the clergyman’s wife from the car’s infotainment computer and posted them on a swingers’ site as a joke. One of the preacher’s parishioners told him about the posted pictures. The clergyman and his wife were understandably upset and were suing the dealership.

Since I wanted to write an article for this blog about vehicle computer forensics and the amazing amount of information that can be obtained from an automobile’s computer systems, I looked for articles about that incident.

However, the articles I found about a Texas preacher whose wife’s nude pictures were posted on a swingers’ website had nothing whatsoever to do with a vehicle’s infotainment computer. The photos were stolen from the customer’s phone. When I realized that he had twisted the story to fit his theme, I was appalled.

What really happened: A preacher and his wife went to a Toyota dealership in Grapevine, in the Dallas area, to buy a car. The minister had obtained loan preapproval through an app on his phone. The salesman took the customer’s phone to show the preapproval to the manager. While out of sight, the salesman found some nude pictures of the wife on the phone, emailed them to himself and to the swingers’ site, and then erased the email. The couple were outraged (rightly so!) by this intrusion into their privacy and the theft of pictures of a “private moment.” They hired attorney Gloria Allred to sue Toyota, the dealership, and the car salesman. You can read more about it in the articles listed under References below.

A computer forensics professional is required to present the facts fairly and accurately. Given these two stories, would you trust this man to represent the facts fairly and accurately? Would you trust him to act ethically and honorably?

I am asking you to add these New Year’s resolutions to your list this year:

- Drive the speed limit. Drive as if it could be your child, your grandmother, or your dog in that crosswalk!

- Check the accuracy of your information before you give a presentation. Give citations, so that other people can verify your work. If I am in the audience, I will.

- Find your blind spot and change it to something positive.

- Do not allow anyone access to your phone, especially if that person is out of your sight.

Have a wonderful new year!

References

“Driver care: Know Your Stopping Distance,” http://www.government-fleet.com/content/driver-care-know-your-stopping-distance.aspx

“Vehicle Stopping Distance and Time,” https://nacto.org/docs/usdg/vehicle_stopping_distance_and_time_upenn.pdf

“Couple Sues Grapevine Car Dealership Claiming Salesman Shared Their Photos on a Swingers Site,” http://www.dallasobserver.com/news/couple-sues-grapevine-car-dealership-claiming-salesman-shared-their-photos-on-a-swingers-site-8957090

“Texas pastor claims Toyota car salesman stole his wife’s nude photos and emailed them to a swingers’ site,” http://www.dailymail.co.uk/news/article-3994292/Texas-pastor-claims-Toyota-car-salesman-stole-wife-s-nude-photos-emailed-swingers-site.html